With a spike in recent major hacks and leaks, AWS S3 has been put in the spotlight due to organizations' failures to secure their object storage in the cloud. Just in June of this year, a big leak of US voter data was made public. This happened right after a May leak of French political campaign data. In July, Verizon leaked data for 6 million users.

And what happened this past month? Somebody exposed millions of Time Warner subscriber records. Corporate security meets public cloud.

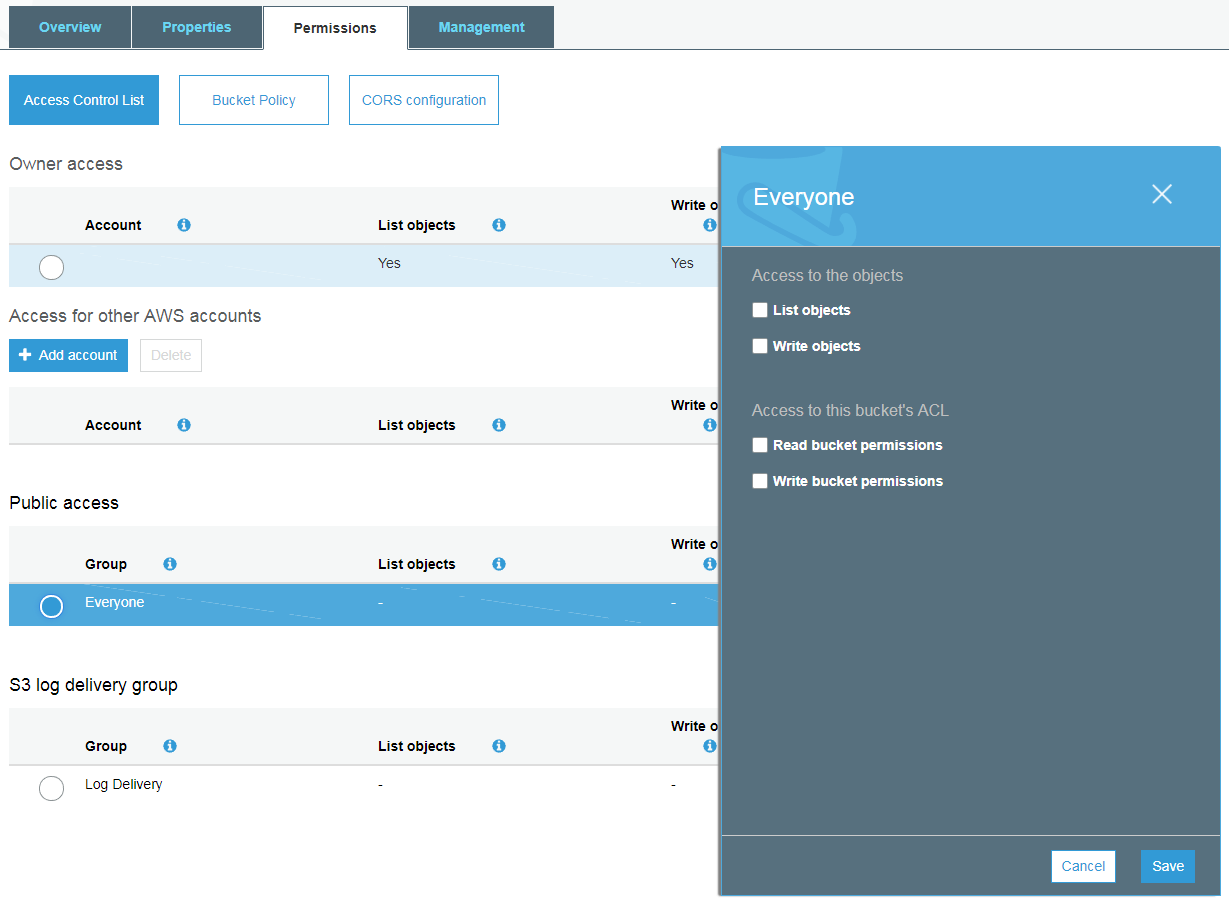

All these leaks came from public S3 buckets. This is not surprising, considering that S3 security can be a bit confusing to novice users and seasoned InfoSec professionals alike. Too many admins misunderstand what ACLs can do and disregard IAM policies because they're "too hard". And that's with Amazon warning you when you make buckets public...

Public access configuration in S3

Let's also not forget that human laziness knows no bounds. Too often, secure S3 policies are relaxed so that "everyone" within AWS can get to the data, with little thought given to who "everyone" actually is.

In addition, AWS API keys are frequently leaked by being checked into GitHub, Bitbucket, and other public source control services. It does not help that many of those API keys belong to users and roles whose IAM policies grant far more permissions than they need.

This practice has become so widespread that there are now multiple public search engines dedicated to searching and parsing leaked API keys and secrets.

This all stems from poorly understood security practices revolving around S3 and IAM. This article will help explain the three basic security controls around S3, how they can be tied into IAM wherever possible, and how to keep your cloud data secure.

Granting Access

The following access controls are available in S3:

ACLs:

- ACLs can be used to grant access to buckets to other AWS accounts, but not to individual users within your own account.

- ACLs grant basic read/write permissions and/or make buckets and objects public.

- You can only set ACLs to provide access to other AWS accounts, yourself, everyone, and for log delivery.

- Both buckets and objects can have ACLs.
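
To make the "everyone" grants concrete, here is a minimal sketch of how an ACL could be checked for public access. It assumes the grants are shaped like the `Grants` list in an S3 GetBucketAcl response; the function name `public_grants` and the sample data are made up for illustration.

```python
# Group URIs that S3 uses for the two "public" grantee groups.
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTHENTICATED_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"

# Human-readable meaning of each group.
PUBLIC_GROUPS = {
    ALL_USERS: "everyone",
    AUTHENTICATED_USERS: "any AWS account",
}


def public_grants(grants):
    """Return (audience, permission) pairs for grants that expose data publicly."""
    findings = []
    for grant in grants:
        grantee = grant.get("Grantee", {})
        if grantee.get("Type") == "Group" and grantee.get("URI") in PUBLIC_GROUPS:
            findings.append((PUBLIC_GROUPS[grantee["URI"]], grant.get("Permission")))
    return findings


# Hypothetical sample data, not taken from a real bucket.
sample_grants = [
    {"Grantee": {"Type": "CanonicalUser", "ID": "owner-canonical-id"},
     "Permission": "FULL_CONTROL"},
    {"Grantee": {"Type": "Group", "URI": ALL_USERS}, "Permission": "READ"},
    {"Grantee": {"Type": "Group", "URI": AUTHENTICATED_USERS}, "Permission": "WRITE"},
]

for audience, permission in public_grants(sample_grants):
    print(f"{permission} granted to {audience}")
```

Note that the "authenticated users" group means any AWS account holder, not just users in your own account, which is exactly the "everyone" trap described earlier.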

Bucket Policies:

- Bucket policies are attached to buckets and set policies on the bucket level. Only buckets can have policies.

- Bucket policies specify who can do what to this particular bucket or set of objects.

- Bucket policies are limited to 20KB in size.

- If you want to set a policy on all the objects within a bucket, you must use the `bucket/*` nomenclature.

- Objects do not inherit permissions from the parent bucket, so you have to go through them and set the permissions yourself or use the `bucket/*` setting.

- Bucket policies include a "Principal" element, which specifies who can access the bucket.

- Bucket policies can use "Condition" to specify IP addresses that can access this bucket to add more security.
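
As a rough illustration of how these pieces fit together, the sketch below builds a bucket policy document with a "Principal", a `bucket/*` resource, and an IP-based "Condition". The bucket name, account ID, and CIDR range are placeholders, and `build_bucket_policy` is a hypothetical helper, not part of any AWS SDK.

```python
import json

BUCKET_POLICY_LIMIT_BYTES = 20 * 1024  # the 20KB limit mentioned above


def build_bucket_policy(bucket, account_id, allowed_cidr):
    """Build a read-only bucket policy restricted to one account and one IP range."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadFromKnownNetwork",
                "Effect": "Allow",
                # Who may access the bucket (here: another AWS account).
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": ["s3:GetObject", "s3:ListBucket"],
                # The bucket itself (for listing) and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # Only requests coming from this address range are allowed.
                "Condition": {"IpAddress": {"aws:SourceIp": allowed_cidr}},
            }
        ],
    }


# Placeholder values for illustration only.
bucket_policy = build_bucket_policy("example-bucket", "111122223333", "203.0.113.0/24")
bucket_policy_json = json.dumps(bucket_policy, indent=2)

assert len(bucket_policy_json.encode("utf-8")) <= BUCKET_POLICY_LIMIT_BYTES
print(bucket_policy_json)
```

The resulting JSON is what you would paste into the bucket's policy editor or pass to the S3 API when setting the bucket policy.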

IAM Policies:

- These are good if you have a lot of objects and buckets with different permissions.

- IAM policies are attached to users, groups, or roles and specify what they can do on a particular bucket.

- Inline IAM policy size limits are 2KB for users, 5KB for groups, and 10KB for roles. Compare this to an S3 bucket policy, which is limited to 20KB of data.
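
For comparison with a bucket policy, here is a sketch of an IAM policy for the same kind of access. There is no "Principal" element because the policy is attached to the user, group, or role it applies to. The helper names, bucket, and prefix are hypothetical, and the size check assumes the limits quoted above apply to the policy text with whitespace removed.

```python
import json

# Inline policy size limits quoted above, in characters.
INLINE_POLICY_LIMITS = {"user": 2048, "group": 5120, "role": 10240}


def build_prefix_policy(bucket, prefix):
    """Build an IAM policy that limits access to one prefix inside a bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOwnPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is only allowed underneath the given prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadWriteOwnPrefix",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


def fits_inline_limit(policy, entity_type):
    """Check the compact JSON form of a policy against the inline size limit."""
    compact = json.dumps(policy, separators=(",", ":"))
    return len(compact) <= INLINE_POLICY_LIMITS[entity_type]


# Placeholder bucket and prefix for illustration only.
iam_policy = build_prefix_policy("example-bucket", "reports")
print(fits_inline_limit(iam_policy, "user"))
```

A check like this is handy when policies are generated from templates, since a long list of buckets or prefixes can quietly push a user policy past the smallest limit.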

Best Practices for Keeping Data in S3 Secure

- Use MFA Delete (multi-factor authentication for deletes) so that two factors of authentication are required to delete an object from S3.

  - Remember the following parts of 2-factor authentication:

    - Password: something you know.

    - Token: something you have.

- Enable versioning of objects. Users will be able to remove objects, but an older version will be kept in S3, which can only be deleted by the owner of the bucket.

  - You can use Lifecycle Rules to help manage how long older versions are kept. You will pay a little extra for the storage that you use, but this security is worthwhile.

- Remember to review your buckets' and objects' permissions regularly. Check for objects that should not be world-readable.

  - Make sure to go through your buckets and objects and verify their permissions. Don't assume that all old objects are still secure.

  - Amazon will send you an email if your objects have wide-open permissions.

- Use secure pre-signed URLs to let third-party users upload data to private S3 buckets.

- Scrub any API keys and secrets from code that uses the AWS API. Check out git-secrets, which can help you do that right before you check in the code.

- This one is not really security related, but more of a performance tuning tip: use randomized prefixes for S3 object names.

  - This ensures that objects are properly sharded across multiple data partitions. With this, S3 object access will not slow down, since no single partition will be hammered for data.

  - Remember that in S3, objects are stored in indexes across multiple partitions, just like in DynamoDB.

  - Scrambled object/key names can help with obscurity and obfuscation of data.
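
One way to get randomized-looking prefixes without losing track of your objects is to derive the prefix from a hash of the original key. The sketch below is only an illustration of that idea; `prefixed_key` and the four-character prefix length are assumptions, not an AWS recommendation.

```python
import hashlib


def prefixed_key(key, prefix_len=4):
    """Prepend a short hash-derived prefix so keys spread across the key space."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return f"{digest[:prefix_len]}/{key}"


# Hypothetical object names that would otherwise share the same leading characters.
names = [
    "logs/2017-09-01.gz",
    "logs/2017-09-02.gz",
    "logs/2017-09-03.gz",
]

for name in names:
    print(prefixed_key(name))
```

Because the prefix is computed from the name rather than drawn at random, the same function can be used later to work out where an object was stored.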
